
26 July 2024 - Podcast #892 - (21:39)

It's Like NPR on the Web

If you find the information TechByter Worldwide provides useful or interesting, please consider a contribution.


This is an election year, and it doesn’t take a genius to expect an onslaught of fake images, manipulated videos, and lies. Every day, the risk grows that people will make important decisions based on these falsehoods.

Lying with photographs used to be difficult. In Stalin’s Soviet Union, people who had been liquidated were removed from photographs by physical manipulation: photos were cut and reassembled, the result was rephotographed, and the new image was retouched with an airbrush. Even the best efforts left visible traces. Removing someone from an image today is trivial, a task that many smartphones can accomplish.

Just a few years ago, a video that purported to show a drunk Nancy Pelosi slurring her words was made simply by slowing down the footage. It was a clumsy effort that was easily debunked, but a lot of people believed it. Today, readily available AI tools make it easy to manipulate both the video and the audio.


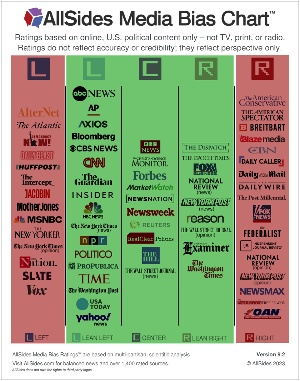

How can we protect ourselves from being fooled? One of the most effective methods involves thinking about the source of a photo or video. Two resources can help with information about bias and accuracy: the AllSides Media Bias Chart and Media Bias/Fact Check.


Information sourced from Reuters, ABC News, NPR, Fox Business, and the Washington Examiner can be treated as less biased, while items from Fox News, Breitbart, MSNBC, and AlterNet should be treated with more skepticism.

Trust people who know what they’re talking about. If I have a question about plumbing, I’ll ask a plumber, not an epidemiologist. But if I want advice on which vaccines I need and when, I’ll depend on the epidemiologist instead of the plumber. A recognized expert with several decades of experience in a field is more likely to have useful, factual information than the person who thought he was an expert on constitutional law last week, thought he was an expert on aerodynamics the week before that, and now thinks he’s an expert on reasons the earth must be flat.

When it comes to images, just looking closely can help. Manipulation, especially if it’s been poorly done, will show clear signs. Examine edges and backgrounds carefully. AI is smart enough to avoid creating repeating patterns most of the time, but repeating patterns are a telltale sign of clumsy work with the clone tool. Several years ago, North Korea released a photo of a rocket launch. The launch had failed, but the manipulated photo made it appear successful until observers called out the repeating patterns in part of the image. AI eliminates many of the obvious flaws, and such images often have low resolution, which makes close inspection difficult. Sometimes you can just trust your senses. If something looks wrong about an image, even if you can’t say exactly what it is, proceed with care.
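Observers caught the North Korean photo by eye, but the clone-tool clue can also be checked mechanically. The following is a minimal Python sketch of the simplest form of copy-move detection, not any particular tool’s method: slide a small window over a grayscale image and report any textured block that appears, pixel for pixel, in more than one place. It assumes the image has already been decoded into a list of rows of integers (a hypothetical input format; a real tool would decode the file with an imaging library), and it finds only exact copies, so rescaling, re-saving as JPEG, or softening the clone’s edges would defeat it.

```python
from collections import defaultdict


def find_cloned_blocks(pixels, size=4):
    """Return lists of (row, col) positions whose size x size blocks are identical.

    pixels: a grayscale image as a list of rows of integers (an assumed input
    format, not a real file decoder). Only exact, unscaled copies are found.
    """
    height, width = len(pixels), len(pixels[0])
    positions_by_block = defaultdict(list)
    for r in range(height - size + 1):
        for c in range(width - size + 1):
            block = tuple(tuple(pixels[r + i][c:c + size]) for i in range(size))
            positions_by_block[block].append((r, c))

    groups = []
    for block, positions in positions_by_block.items():
        # Flat areas such as clear sky repeat naturally, so ignore blocks
        # that contain only a single value.
        textured = len({value for row in block for value in row}) > 1
        if textured and len(positions) > 1:
            groups.append(positions)
    return groups
```

On a real photo, a cloned patch produces a match for every window position inside it, and natural repeating textures such as brick walls or fences can also match, so a hit means “look closer,” not “proven fake.”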

An image of a cat standing on a stage, singing into a microphone, is obviously not a real cat standing on a real stage. The stage is a table, but if that table is in what appears to be a subway station, sitting in the track area yet level with the platform, it’s not real either. Nor is the other cat on the platform. And there are lots of other problems that clearly mark the image as fake.


But what about a cat with a speech bubble and sunglasses? What’s manipulated here? Obviously the cat didn’t say the word in the speech bubble, because cats don’t speak American English. The cat is real, but the sunglasses are fake. It would be possible to put sunglasses on a cat, but these are entirely AI. What else is fake? The background on the right is real, but I expanded the image on the left to make room for the speech bubble. Everything from the cat’s whiskers to the left edge of the image is AI.


Sometimes an image simply shrieks about manipulation. (Sorry about the profanity on the fake sign.) It would cost hundreds, if not thousands, of dollars to alter a real Burger King sign this way, and no restaurant owner would make the situation worse with the words below the sign. If you look closely, you’ll see that the letters are clearly manipulated. Yet this image was posted to a Facebook group that’s supposedly about poorly made real signs. Early responses indicated that nearly 100% of those who commented recognized it as a phony.


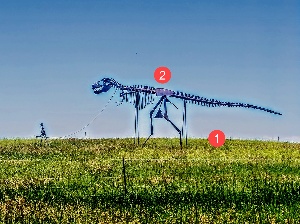

You may think you’ve spotted a phony image but find that it’s real. A metal sculpture of a human skeleton walking a dinosaur skeleton is 100% real and it’s located in South Dakota. The (1) fringing and (2) color banding are not signs of photo fakery, but simply indicate incompetent JPEG processing.


A subreddit, BadPhotoshop, is a good resource if you want to learn more about spotting fakes.

Some of the clues to look for in images: (1) AI often likes to place lights in the background. This isn’t a clear indicator of fakeness, but it’s something to watch for. Sometimes something obvious is missing, such as a (2) sink that has no water faucets. Look for (3) random objects in the background that seem unrelated or out of place. Lighting is often too good, and you’ll see (4) detail in both near-white areas and near-black areas. Maybe you’ll find an object that (5) “just doesn’t look right.”


When there’s a (1) URL or domain name on the image, try going to the website, because you might find that the site is dedicated to “Science Fiction and Fantasy Come True.” In that case, you need go no further. If you’re still not sure, look for (2) transitions from sharp focus to blur that are too abrupt, or (3) designs that would not be permitted in the real world because they would make it impossible to operate something safely, such as a tram with a large object that severely blocks the operator’s forward view.


This may seem all too much like Spy vs. Spy (the old Mad Magazine feature), or the battle between police and the manufacturers of radar detectors. AI can create convincing fakes that are hard for people to identify, but AI can also be used to spot the fakes. These days you don’t have to be a skilled artist or a skilled videographer to create phony photos and videos. As of today, AI does a good job of spotting the phonies, but the technology continues to improve. Adobe has shown some astonishing technology, some of which is already available, that offers real-world benefits but can also be misused. That’s true of any tool, of course, and there’s no putting the genie back in the bottle. We just need to become more adept at identifying the work of those who would fool us for their own benefit.

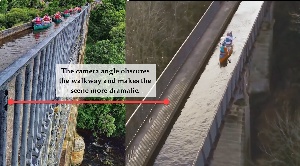

The manipulation might not involve a computer at all. The longest aqueduct in the United Kingdom, a viaduct that carries a canal, is in Wales. A photo that’s intended to be dramatic makes the viaduct appear much narrower than it actually is, and the angle hides a walkway. A second photo, taken from above and looking the other direction, shows it more accurately. Visitors can walk across the viaduct, canoe across it, or ride a canal boat.


Sometimes we can learn more about a photo by finding out where it has been displayed. The RevEye Reverse Image Search plug-in is available for Chrome-based browsers as well as for Firefox. RevEye submits an image to Google Lens, Bing, Yandex (a Russian search engine), and TinEye.


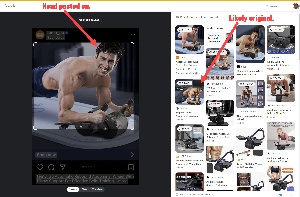

Somebody created an unconvincing image of someone exercising in a gym. If you think the head “just looks wrong,” you’re right. The depth of field is wrong, the head is clearly a cutout, and the shadows aren’t right. Using the RevEye Reverse Image Search plug-in, I found another photo that’s probably from the same photo shoot: not the exact image used for the fake ad, but one likely taken during the same session.

State-sponsored meme makers in North Korea, China, and Russia are doing everything in their power to create additional divisions in the West in general and the United States in particular. Phony images, rumors, and fake stories are their ammunition, and they are quite good at it. We need to be equally good at identifying the lies. Let’s consider some additional strategies and resources in Short Circuits.

When you see an image that’s designed to enrage, maybe it’s a good idea to stop for a moment and check it out. If you share images with others, there’s a good chance that you’ve passed on a fraudulent image at some point.

I have done this occasionally when an image aligned with my biases or when I believed that the people I received an image from would have checked its validity. Something like three billion images are uploaded to the internet every day, and a surprising number contain misinformation or disinformation.

Sometimes it’s as simple as an image being cropped to exclude important information, or a legitimate image being misrepresented as coming from a different location. In other cases, the image has been manipulated or created entirely by AI.

An article on ScienceDirect by Eryn Newman and Norbert Schwarz notes that any image can be used to lie, accidentally or intentionally, and describes five common ways images are used to tell the truth or to lie.

The article explains why identifying misleading images is difficult. Slanted, repurposed, and decorative images can be identified only by carefully considering their relation to the accompanying text, and slanted and repurposed photos are particularly challenging. Spotting fake images is easier because it requires only that the viewer look for signs of tampering, such as the quality of the alterations. Even so, the article notes research finding that “AI-generated White faces seem more real than White human faces and that AI faces of other races are indistinguishable from human faces.”

The research says that people believe they can identify fake photos but actually perform poorly. The problem is greater for older adults, the researchers say, and while training can improve performance, AI keeps improving too, making detection even harder. Fortunately, AI analysis outperforms human discernment, at least for now.

Among the most troubling findings is that growing concern about deep fakes may cause people to doubt all images. “While such distrust can improve critical reasoning and identification of misleading information, it also undermines trust in the media and impairs the beneficial societal functions of trust in institutions,” according to Newman and Schwarz.

To avoid “throwing the baby out with the bathwater,” it’s important for humans to improve their ability to spot fakery and to use tools that can assist. Here are some.

ExifInfo reads information that’s stored in the image file, if the information is still there. It’s important to know that EXIF (exchangeable image file format) and IPTC (International Press Telecommunications Council) data can be modified or removed, but these sources are still a good way to get hints about when and where a photo originated.
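ExifInfo’s internals aren’t described here, but the basic idea of reading EXIF can be sketched with nothing more than Python’s standard library: find the JPEG’s APP1 segment that begins with “Exif”, then read the TIFF-style directory (IFD0) inside it. This is a minimal sketch under stated assumptions, not how ExifInfo works. It reads only text (ASCII) tags such as camera make, model, software, and date from the first directory; a real tool would also walk the Exif and GPS sub-directories, handle numeric tag types, and read IPTC data.

```python
import struct

# A few IFD0 tag numbers from the EXIF/TIFF specification.
TAG_NAMES = {0x010F: "Make", 0x0110: "Model", 0x0131: "Software", 0x0132: "DateTime"}


def read_exif_ascii_tags(jpeg):
    """Return {tag_number: text} for ASCII tags in the first EXIF directory (IFD0)."""
    if jpeg[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing start-of-image marker")
    pos = 2
    while pos + 4 <= len(jpeg) and jpeg[pos] == 0xFF:
        marker = jpeg[pos + 1]
        if marker == 0xDA:  # start of scan: metadata segments come before this
            break
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])  # includes these 2 bytes
        segment = jpeg[pos + 4:pos + 2 + length]
        if marker == 0xE1 and segment.startswith(b"Exif\x00\x00"):
            return _parse_ifd0(segment[6:])
        pos += 2 + length
    return {}  # no EXIF found; it may have been stripped


def _parse_ifd0(tiff):
    # The TIFF header says whether numbers are little-endian ("II") or big-endian ("MM").
    order = "<" if tiff[:2] == b"II" else ">"
    (ifd_offset,) = struct.unpack(order + "I", tiff[4:8])
    (count,) = struct.unpack(order + "H", tiff[ifd_offset:ifd_offset + 2])
    tags = {}
    for i in range(count):
        start = ifd_offset + 2 + 12 * i  # each directory entry is 12 bytes
        tag, value_type, n = struct.unpack(order + "HHI", tiff[start:start + 8])
        if value_type != 2:  # type 2 is ASCII; other types are skipped in this sketch
            continue
        if n <= 4:  # short values are stored inside the entry itself
            raw = tiff[start + 8:start + 8 + n]
        else:  # longer values live elsewhere, at an offset from the TIFF header
            (value_offset,) = struct.unpack(order + "I", tiff[start + 8:start + 12])
            raw = tiff[value_offset:value_offset + n]
        tags[tag] = raw.rstrip(b"\x00").decode("ascii", errors="replace")
    return tags
```

To try it on a file (the name photo.jpg is just a placeholder), read the bytes with `open("photo.jpg", "rb").read()`, pass them to `read_exif_ascii_tags`, and label each result with `TAG_NAMES.get(tag, hex(tag))`. An empty result says only that the metadata is gone, not that the photo is fake.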


Foto Forensics includes EXIF and IPTC information and adds error level analysis (ELA) to highlight parts of a picture that may have been added or altered during editing. After processing a photo, the site displays a version of the image in which edited areas stand out.


A friend posted a photo he had taken of some mushrooms. He mentioned that no Smurfs were present, so I downloaded the image, added Papa Smurf, and uploaded it again with a note that he needed to look closer. When I gave the image to Foto Forensics and selected the ELA function, it clearly indicated that the Smurf character had been added to the original photo.

Here’s how it works: JPEG’s lossy compression is normally applied uniformly to the image, so the level of compression artifacts is expected to be consistent across the image. When something is added from another photograph, the compression artifacts may differ. If they do, the ELA analysis can make them visible.
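To see why re-saving exposes pasted content, here’s a toy model in Python; it is not Foto Forensics’ code. It assumes a simplified JPEG: each 8x8 block goes through a discrete cosine transform (DCT), every coefficient is rounded to a multiple of a single quantization step, and the block is transformed back. Real JPEG uses a per-frequency quantization table, rounds pixels to whole numbers, and usually reduces color detail, so untouched areas of a real photo show small error levels rather than zero. The principle is the same: a block that has already been through the compression barely changes when compressed again at the same setting, while a block that never was (like a pasted Smurf) changes noticeably.

```python
import math
import random

N = 8  # JPEG compresses images in 8x8 pixel blocks

# Orthonormal DCT-II basis matrix: C[u][x] = c(u) * cos((2x + 1) * u * pi / 16)
C = [[(math.sqrt(1 / N) if u == 0 else math.sqrt(2 / N))
      * math.cos((2 * x + 1) * u * math.pi / (2 * N))
      for x in range(N)] for u in range(N)]
CT = [list(col) for col in zip(*C)]  # transpose of C


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(N)) for j in range(N)]
            for i in range(N)]


def compress_block(block, step):
    """Toy lossy compression: DCT, round each coefficient to a multiple of step, inverse DCT."""
    coeffs = matmul(matmul(C, block), CT)
    quantized = [[round(v / step) * step for v in row] for row in coeffs]
    return matmul(matmul(CT, quantized), C)


def error_level(block, step):
    """Mean absolute pixel change when the block is compressed again at the same step."""
    again = compress_block(block, step)
    diffs = [abs(a - b) for row_a, row_b in zip(block, again) for a, b in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


rng = random.Random(42)


def random_block():
    return [[rng.randrange(256) for _ in range(N)] for _ in range(N)]


STEP = 20
saved_once = compress_block(random_block(), STEP)  # part of the original JPEG
pasted = random_block()  # new content that never went through this compression
print(f"original area: {error_level(saved_once, STEP):.3f}")  # essentially zero
print(f"pasted area:   {error_level(pasted, STEP):.3f}")  # noticeably larger
```

An ELA display does roughly this across the whole picture: recompress at a known quality, subtract, and amplify the differences so that regions with a different compression history stand out.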

This works only with images saved in a lossy format such as JPEG, so it’s useless with TIFF, BMP, or PNG images that don’t use lossy compression. GIF images can’t be analyzed with ELA either: GIF limits an image to a palette of 256 or fewer colors and then compresses it losslessly, so it doesn’t produce the artifacts associated with JPEG compression.

Just as protective software won’t totally protect computer users from malware, hackers, phishing, and other threats, we can’t depend on tricks, software, and artificial intelligence to protect us from deep-fake images and videos. Not being fooled by fakery depends on human brainpower.

One additional set of steps for identifying fakes, particularly deep fakes that can be nearly impossible to detect by spotting inconsistencies and oddities, is summed up by the acronym SIFT: Stop, Investigate the source, Find better coverage, and Trace the original context.

We all suffer from what’s called confirmation bias. When we see an image, a video, or some text that coincides with our world view, we tend to believe it. Maybe you’ve seen a photo that claims to show Donald Trump being pushed to the ground and arrested by uniformed police officers. Of course it was a fake, but some people passed it on as an accurate depiction of an actual event.

That’s where SIFT comes in. Currently we rely far too much on telltale signs of manipulation. I’ve mentioned those previously, but the software is improving and we can’t depend on spotting an anomaly. I’ve seen some of what Adobe is working on, applications that have legitimate reasons for existing, but that can be used to make images and videos that lie convincingly.

In the end, it comes down to you. We all need to take care when we share information. Being careful doesn’t mean you’ll never make a mistake and share something that was created in Moscow, Beijing, or Pyongyang, but it does give you a fighting chance.

TechByter Worldwide is no longer in production, but TechByter Notes is a series of brief, occasional, unscheduled, technology notes published via Substack. All TechByter Worldwide subscribers have been transferred to TechByter Notes. If you’re new here and you’d like to view the new service or subscribe to it, you can do that here: TechByter Notes.