7 Jun 2024 - Podcast #885 - (17:59)

It's Like NPR on the Web

If you find the information TechByter Worldwide provides useful or interesting, please consider a contribution.

I’ll probably never see a candy bar that claims to have artificial intelligence, but that’s only because I don’t buy candy bars, not because nobody has considered adding the term. Not everything with an AI label has any intelligence, artificial or otherwise.

Well-implemented AI can be little short of astounding, but poorly implemented AI or fake AI is far worse than merely disappointing. AI seems to be everywhere, so now the challenge is to separate the good from the bad, the useful from the useless.

One example: (A) Google AI, which is a significant, new, and evolving feature in Pixel phones. The company calls it “Gemini”. Google introduced it in mid-2023, but users didn’t start to see it until the end of the year. Some of the more powerful features require the latest model phone, and that’s currently the Pixel 8 Pro. Google says the features will be available on the less expensive Pixel 8 later this year, but apparently not on Pixel 7 devices or earlier.

Gemini can do some remarkable things. I had just eaten breakfast and had an orange peel on a plate. (B) I took a picture of it and asked Gemini to tell me what it was. “The image you sent me shows a single orange sitting on a white plate. The orange is a citrus fruit that is round and has a bright orange rind. The flesh of the orange is segmented and juicy, and it has a sweet and tart flavor. Oranges are a good source of vitamin C and other nutrients.” I’m not sure whether I should be impressed because it identified a partial image of an orange peel as an orange or disappointed because it didn’t tell me the orange had been eaten. The description, though, is accurate.

If you see something you’d like more information about, use Gemini to take a picture of it and ask a question. (C) I saw an unusual building in a Facebook post, but there wasn’t any information about where or what the building is. (D) I circled the image and Gemini returned a website link and additional information that said the building is a church in North Carolina.

One more: I took a picture of a can of (E) Head Hunter IPA and asked where I could buy it. Gemini told me it was not programmed to provide that information. When I tried again and asked where the beer is brewed, Gemini said “The can in the image is Fat Head’s Head Hunter IPA, which is brewed in Middleburg Heights, Ohio, by Fat Head’s Brewery.”

Microsoft has similar functionality called “Lens”, and other AI systems can perform similar tasks, not to call them tricks.

Google’s most remarkable technology on its phones is centered on photography, though. No photographer would ever use a phone for a wedding, journalism, or any other big event unless something happened to their real cameras. But a smartphone in the hands of an inexperienced photographer makes it possible for that person to capture surprisingly good images.

Consider a photo of Holly Cat with her favorite toy. When I took the photo, the phone was tilted a bit and there was a pen in the lower right corner. A slight bit of rotation fixed the tilt and cropping improved the composition. But then I circled the pen and told the phone to remove it. All of that work was done on a phone in just a few seconds.

If we’re thinking about AI and photography, though, Adobe is a key player. For my own entertainment, I create daily images that celebrate special events as found on the Holidays Calendar website. Sunday, 7 April 2024, was National Coffee Cake Day. The coffee cake image I found was vertical, but I use only horizontal images. I liked the image, but would have been unable to use it previously.

With Adobe Photoshop’s new AI features, I was able to extend the image a bit on the left and considerably on the right. The left extension filled in part of a wall, extended a napkin and fork, and completed the table. The process on the right was more complicated, finishing the plate, table, and a window; but it also added an object on the table. An additional content-aware AI function removed the object. I needed only to add the descriptive text and date.

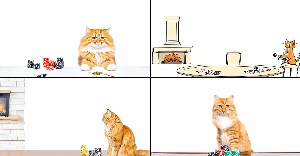

But even Adobe isn’t perfect. At least not yet. I provided both Microsoft Image Creator and Adobe Photoshop (Beta) with Firefly the same text prompt to use in creating an image: “Long hair striped orange cat sitting like a human on a highly polished mahogany table and playing poker in front of a fireplace.” To say that Adobe’s results were shockingly disappointing is an understatement. One of the images was more of a cartoon and the other three weren’t much better.

One of the cats was sitting at the table, not on it. Two of the cats were on a table, but only one had part of a fireplace in the background.

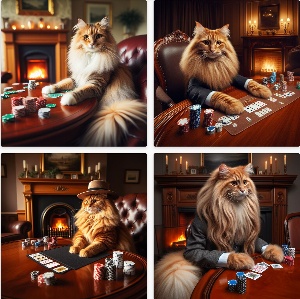

Microsoft’s results were far better, but all of the cats were sitting in chairs instead of on the table. Clearly they were all artistic renderings, but the fur, eyes, paws, and backgrounds (with a fireplace) were surprisingly realistic. Frighteningly realistic, truth be told. These are the kinds of images that far too many people seem to think are real and pass off as actual photographs of real cats.

AI is also being used for motion pictures, both new and old. Computer graphics have made a great leap forward. In one of the new Doctor Who episodes starring Ncuti Gatwa as the Doctor, some of the characters are babies who appear to be speaking.

But some of the most remarkable AI effects are being used to enhance hundred-year-old films. Writing in Wired, Matt Simon describes how a 12-minute film from 14 April 1906 showing Market Street in San Francisco has been improved using AI. See the original and the updated version on YouTube. Even the sound has been enhanced and you may notice that San Francisco drivers were even worse in 1906 than they are today.

If you’re familiar with San Francisco’s history, you’ll know that an earthquake and fire destroyed much of the city just four days after the film was made.

Those who remember the low-quality images from the moon in the 1960s and 1970s will marvel at enhanced videos, for example the lunar rover being operated on the moon in 1972.

The article asks the obvious question: Is this honest and ethical? Colorization of old black and white films has been controversial since it began and upsampling low resolution images and motion pictures adds information that wasn’t there. But simply scanning old films to digital video without making any intentional changes subtly modifies the original. It would be wrong to use digital enhancements to “prove” that something happened or didn’t happen, but the technology can make these old images more accessible to a modern audience, and I see nothing wrong with that so long as the originals are also preserved.

Let’s take a look at some of the other current and upcoming AI offerings in Short Circuits.

Navigation tools such as Waze do a good job most of the time. Sometimes they recognize problems on the intended route and direct you around them. But they could be better if they took other factors into account.

The first time Waze tried to steer me around a problem, I thought it had gone crazy. “Why does it want me to go that way?” I said. “This way is more direct.” A mile later, traffic slowed and then stopped because of a crash. Waze also seems to learn. Even when I know exactly how to get where I’m going, I usually activate it so that it will warn about problems along the route.

Sometimes my way is not the Waze way, though. Often, when I’ve gone my way two or three times, Waze recognizes my route and defaults to that instead of recalculating. Google owns Waze and I wonder how much longer it will be offered. Presumably somebody at Google wonders why they’re offering two apps that perform essentially the same function.

Gemini could fundamentally change the way Maps works by considering weather, events, and other factors that would have a bearing on the time required to make a trip. It should also be possible for users to give the system more explicit commands with information about additional stops along the way and specific routes to take or to avoid.

Pixel 8 Pro users can call on Gemini Nano to compose answers to text messages. I haven’t yet given this a try because I’m inclined not to use AI to write for me. Theoretically I could speak a response to a message and then tell Nano to shorten it or make it more formal. Currently it works only in Google Messages using Google’s keyboard (Gboard), not Facebook Messenger, and only with the Pixel 8 Pro. It’s expected to be made available on the Pixel 8 in coming months.

To activate this on a Pixel 8 Pro, go to Settings > About phone. Then scroll to the bottom of the list where the software build number is shown. The secret handshake, if you haven’t previously enabled Developer Mode, involves tapping the build number 7 times and then entering your phone’s PIN. Then navigate to Settings > System > Developer options and scroll down to AICore Settings. Tap this and make sure the Enable AICore Persistent toggle is on.

The Recorder app has been able to provide a transcript of recorded conversations for a while, but now there’s a new Summarize button that, as the name suggests, summarizes the conversation. Because it’s a Nano function, the processing happens on your phone and the text is not passed to a cloud-based function.

This is not related to maps at all, but it is amusing and useful. QR codes can be boring, but a special QR Code Generator can use AI to create one with some custom art.

Give it a text prompt such as “New York City skyline at sunset” or upload an image, then fill in a few details to guide the process in your quest for a perfect QR code. Wait a minute or so, and you will have a non-boring QR code. The one shown here goes to the TechByter main page.

In fact, the Desktop shouldn’t have been the last place I might expect to find AI. It’s perfectly logical, given Microsoft’s push to place AI everywhere. But it’s not Microsoft that’s putting AI on the desktop. It’s Stardock.

As I mentioned last December, I thought Stardock had been a one-hit wonder 30 years ago, but then I found WindowBlinds 11, an updated version of the application offered back then. Stardock combines several utilities in a package called Object Desktop for $40 per year. In May, the company added Stardock GPT, which is now in beta, to Object Desktop.

Schrödinger’s cat is a thought experiment proposed by Austrian physicist Erwin Schrödinger in 1935 to illustrate the concept of superposition in quantum mechanics. In the experiment, a cat is placed in a sealed box along with a radioactive atom, a Geiger counter, and a vial of poison. If the Geiger counter detects that the atom has decayed, it triggers the release of the poison, which would kill the cat.

According to quantum mechanics, before the box is opened and the cat’s state is observed, the cat exists in a superposition of being both alive and dead at the same time. This is because the state of the radioactive atom is also in a superposition of decayed and not decayed until it is observed.

The paradox of Schrödinger’s cat highlights the strange and counterintuitive nature of quantum mechanics, where particles can exist in multiple states simultaneously until they are observed or measured. The experiment is often used to illustrate the concept of quantum superposition and the role of observation in determining the outcome of a quantum system.

It uses OpenAI’s services to bring AI models to the desktop and offers GPT-3.5 Turbo, GPT-4, and GPT-4 Turbo. Submissions or queries to OpenAI incur a cost that is tracked by the usage of tokens. The multiplier for GPT-3.5 Turbo is 1, 2.5 for GPT-4 Turbo, and 5 for GPT-4. Stardock says most users will find GPT-3.5 Turbo adequate for most uses. Those who sign up for Stardock GPT at no additional fee will receive 10,000 tokens per month. So a question that costs 500 tokens on GPT-3.5 Turbo would cost 1250 tokens on GPT-4 Turbo and 2500 tokens on GPT-4. Additional tokens will be available for purchase and the current advertised price is $5 for 50,000 tokens.

Stardock is evaluating token usage during the beta period and plans to update the weightings to better reflect usage of the API. Tokens are non-refundable and users must have at least 2000 tokens available to submit a question because both the prompt and the answer incur token usage.
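For anyone who wants to check the token arithmetic, here is a minimal Python sketch that uses only the beta figures quoted above: the three multipliers, the 2000-token minimum, and the advertised $5 for 50,000 tokens. The function and constant names are my own, not anything from Stardock or OpenAI, and how Stardock actually rounds or deducts tokens is an assumption here.

```python
from decimal import Decimal

# Multipliers as quoted for the beta; Stardock says the weightings may change.
MODEL_MULTIPLIERS = {
    "gpt-3.5-turbo": Decimal("1"),
    "gpt-4-turbo": Decimal("2.5"),
    "gpt-4": Decimal("5"),
}

# Minimum balance required before a question can be submitted.
MIN_BALANCE_TO_SUBMIT = 2_000

# Advertised price for extra tokens: $5 for 50,000 tokens.
PRICE_PER_TOKEN_USD = Decimal("5") / Decimal("50000")


def weighted_cost(base_tokens: int, model: str) -> int:
    """Tokens deducted for a query that would cost base_tokens at multiplier 1.

    Truncating to a whole number is an assumption made for this sketch.
    """
    return int(base_tokens * MODEL_MULTIPLIERS[model])


def can_submit(balance: int) -> bool:
    """True if the balance meets the minimum needed to submit a question."""
    return balance >= MIN_BALANCE_TO_SUBMIT


def purchased_cost_usd(tokens: int) -> Decimal:
    """Dollar cost of the given number of tokens at the advertised rate."""
    return tokens * PRICE_PER_TOKEN_USD


if __name__ == "__main__":
    for model in MODEL_MULTIPLIERS:
        tokens = weighted_cost(500, model)
        print(model, tokens, purchased_cost_usd(tokens))
```

Running it for a 500-token question reproduces the 500, 1250, and 2500 figures for the three models. At the advertised rate, a purchased token works out to a hundredth of a cent.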

I asked the system to explain Schrödinger’s cat. Using GPT-3.5 Turbo, the interaction cost only a few hundred tokens.

Stardock says when a prompt or query is submitted to any of the various models using DesktopGPT, Stardock will track usage of the tokens that are linked to the account. The data that is submitted to OpenAI does pass through Stardock’s servers to track token usage, but the prompt and answer are encrypted and Stardock does not log or retain information that is submitted.

For more information or to download the Stardock GPT beta, visit Stardock’s website.

TechByter Worldwide is no longer in production, but TechByter Notes is a series of brief, occasional, unscheduled, technology notes published via Substack. All TechByter Worldwide subscribers have been transferred to TechByter Notes. If you’re new here and you’d like to view the new service or subscribe to it, you can do that here: TechByter Notes.